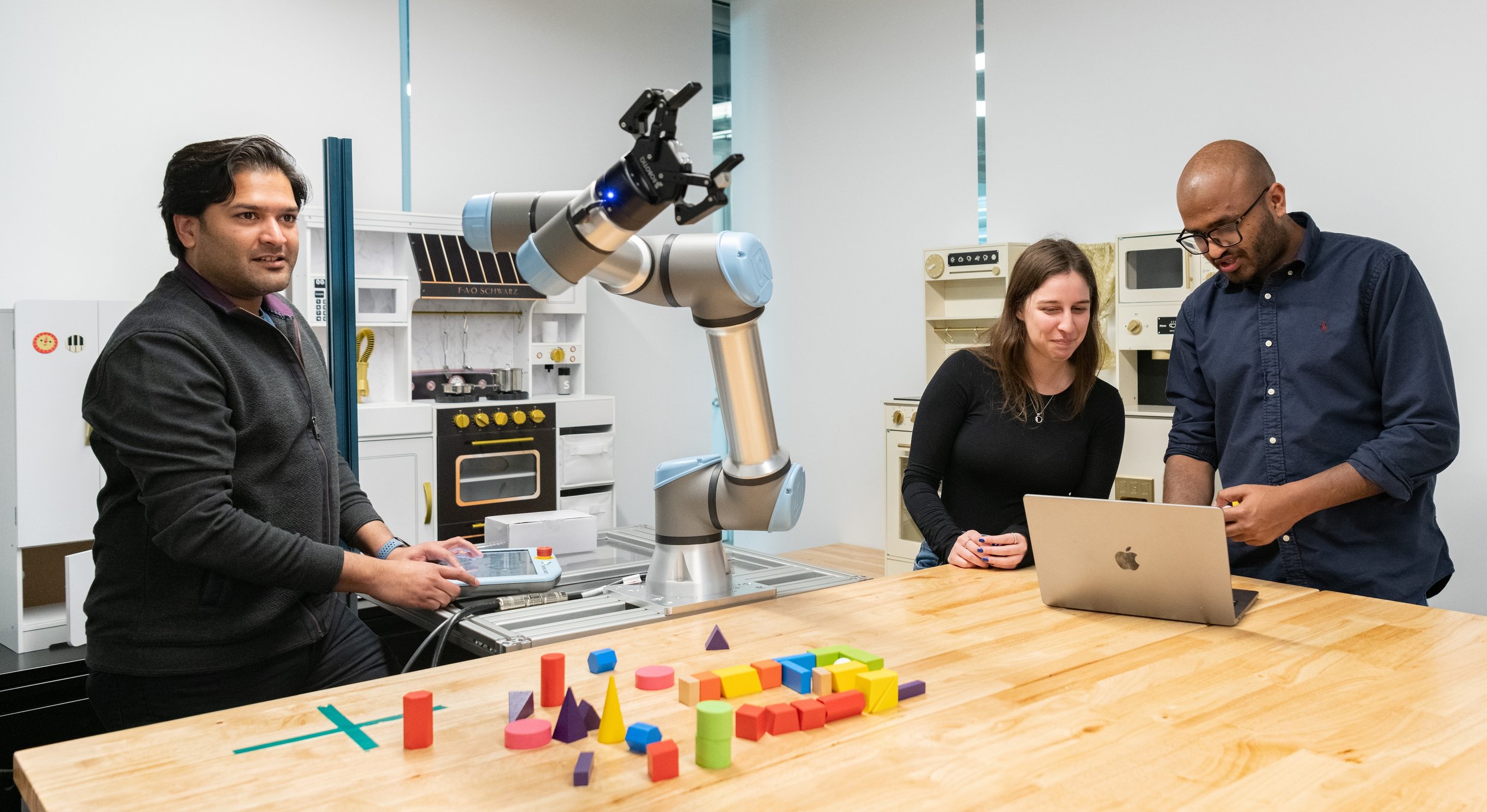

Abhinav Shrivastava

Associate Professor of Computer Science

University of Maryland

Areas of Expertise: Computer Vision and Machine Learning

Abhinav Shrivastava is an associate professor of computer science with an appointment in the University of Maryland Institute for Advanced Computer Studies. Shrivastava’s research focuses on artificial intelligence, particularly as it relates to computer vision, machine learning, and robotics. He is also interested in graphics, natural language processing, human-computer interaction, systems, data mining, and cognitive and computational neuroscience.

-

Walmer, M., Suri, S., Gupta, K., & Shrivastava, A. (2023). Teaching Matters: Investigating the Role of Supervision in Vision Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

Abstract: Vision Transformers (ViTs) have gained significant popularity in recent years and have proliferated into many applications. However, it is not well explored how varied their behavior is under different learning paradigms. We compare ViTs trained through different methods of supervision, and show that they learn a diverse range of behaviors in terms of their attention, representations, and downstream performance. We also discover ViT behaviors that are consistent across supervision, including the emergence of Offset Local Attention Heads. These are self-attention heads which attend to a token adjacent to the current token with a fixed directional offset, a phenomenon that to the best of our knowledge has not been highlighted in any prior work. Through our analysis, we show that ViTs are highly flexible and learn to process local and global information in different orders depending on their training method. We find that contrastive self-supervised methods learn features that are competitive with explicitly supervised features, and they can even be superior for part-level tasks. We also find that the representations of reconstruction-based models show non-trivial similarity to contrastive self-supervised models. Finally, we show how the "best" layer for a given task varies by both supervision method and task, further demonstrating the differing order of information processing in ViTs.
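One way to look for the Offset Local Attention Heads described above is to check where each query patch places its strongest attention, for every head. The sketch below is a hypothetical probe and not the measurement used in the paper. It assumes attention maps of shape `(num_heads, N, N)` over a `grid_h` by `grid_w` patch grid, with the CLS token already removed. The name `dominant_offsets` is illustrative.

```python
import numpy as np

def dominant_offsets(attn, grid_h, grid_w):
    """For each head, return the most frequent (dy, dx) offset from a query patch
    to the key patch it attends to most strongly, and the fraction of queries
    that share that offset.

    attn: (num_heads, N, N) attention weights, N = grid_h * grid_w patch tokens
          (assumes the CLS token has already been dropped).
    """
    num_heads, n, _ = attn.shape
    assert n == grid_h * grid_w, "attention size must match the patch grid"
    # Row and column of every patch token on the 2-D grid.
    rows, cols = np.divmod(np.arange(n), grid_w)
    results = []
    for h in range(num_heads):
        targets = attn[h].argmax(axis=1)   # strongest key for each query
        dy = rows[targets] - rows          # vertical offset per query
        dx = cols[targets] - cols          # horizontal offset per query
        offsets, counts = np.unique(np.stack([dy, dx], axis=1), axis=0,
                                    return_counts=True)
        best = counts.argmax()
        results.append((tuple(int(v) for v in offsets[best]), counts[best] / n))
    return results
```

A head whose dominant offset is a fixed nonzero neighbor, such as `(0, 1)` (the patch to the right), and that applies to most queries fits the offset-local pattern. Any cutoff for "most" is a choice the user would make, not one taken from the paper.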

-

Gwilliam, M., & Shrivastava, A. (2023). Beyond Supervised vs. Unsupervised: Representative Benchmarking and Analysis of Image Representation Learning. arXiv preprint arXiv:2206.08347.

Abstract: By leveraging contrastive learning, clustering, and other pretext tasks, unsupervised methods for learning image representations have reached impressive results on standard benchmarks. The result has been a crowded field: many methods with substantially different implementations yield results that seem nearly identical on popular benchmarks, such as linear evaluation on ImageNet. However, a single result does not tell the whole story. In this paper, we compare methods using performance-based benchmarks such as linear evaluation, nearest neighbor classification, and clustering for several different datasets, demonstrating the lack of a clear frontrunner within the current state-of-the-art. In contrast to prior work that performs only supervised vs. unsupervised comparison, we compare several different unsupervised methods against each other. To enrich this comparison, we analyze embeddings with measurements such as uniformity, tolerance, and centered kernel alignment (CKA), and propose two new metrics of our own: nearest neighbor graph similarity and linear prediction overlap. We reveal through our analysis that in isolation, single popular methods should not be treated as though they represent the field as a whole, and that future work ought to consider how to leverage the complementary nature of these methods. We also leverage CKA to provide a framework to robustly quantify augmentation invariance, and provide a reminder that certain types of invariance will be undesirable for downstream tasks.
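Centered kernel alignment compares two sets of embeddings computed on the same inputs. The sketch below shows the standard linear form of CKA. It is not the paper's full augmentation-invariance framework. As an assumption, one could apply it to features of clean images and features of their augmented copies. The function name `linear_cka` is illustrative.

```python
import numpy as np

def linear_cka(x, y):
    """Linear centered kernel alignment between two representations.

    x: (n_samples, d1), y: (n_samples, d2); row i of both must come from the same input.
    Returns a similarity in [0, 1]; 1 means identical up to rotation and isotropic scaling.
    """
    # Center every feature dimension so CKA ignores constant offsets.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    # ||X^T Y||_F^2 normalized by ||X^T X||_F * ||Y^T Y||_F.
    cross = np.linalg.norm(x.T @ y, "fro") ** 2
    return cross / (np.linalg.norm(x.T @ x, "fro") * np.linalg.norm(y.T @ y, "fro"))
```

Because linear CKA is invariant to orthogonal transforms and uniform scaling, `linear_cka(x, 2.0 * x @ q)` is 1 for any orthogonal `q`. This invariance is what makes CKA useful for comparing layers or models whose feature bases differ.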

-

Walmer, M., Sikka, K., Sur, I., Shrivastava, A., & Jha, S. (2022). Dual-Key Multimodal Backdoors for Visual Question Answering. arXiv preprint arXiv:2112.07668.

Abstract: The success of deep learning has enabled advances in multimodal tasks that require non-trivial fusion of multiple input domains. Although multimodal models have shown potential in many problems, their increased complexity makes them more vulnerable to attacks. A Backdoor (or Trojan) attack is a class of security vulnerability wherein an attacker embeds a malicious secret behavior into a network (e.g. targeted misclassification) that is activated when an attacker-specified trigger is added to an input. In this work, we show that multimodal networks are vulnerable to a novel type of attack that we refer to as Dual-Key Multimodal Backdoors. This attack exploits the complex fusion mechanisms used by state-of-the-art networks to embed backdoors that are both effective and stealthy. Instead of using a single trigger, the proposed attack embeds a trigger in each of the input modalities and activates the malicious behavior only when both the triggers are present. We present an extensive study of multimodal backdoors on the Visual Question Answering (VQA) task with multiple architectures and visual feature backbones. A major challenge in embedding backdoors in VQA models is that most models use visual features extracted from a fixed pretrained object detector. This is challenging for the attacker as the detector can distort or ignore the visual trigger entirely, which leads to models where backdoors are over-reliant on the language trigger. We tackle this problem by proposing a visual trigger optimization strategy designed for pretrained object detectors. Through this method, we create Dual-Key Backdoors with over a 98% attack success rate while only poisoning 1% of the training data. Finally, we release TrojVQA, a large collection of clean and trojan VQA models to enable research in defending against multimodal backdoors.
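The data side of a dual-key backdoor can be pictured as stamping both keys onto a small fraction of training samples and relabeling them. The toy sketch below shows only that step, at the 1% rate mentioned in the abstract. The following details are hypothetical simplifications: the corner patch, the prepended trigger word, and the function name `poison_dataset`. The paper optimizes its visual trigger for a pretrained detector. This sketch alone does not force a model to require *both* keys.

```python
import numpy as np

def poison_dataset(images, questions, answers, visual_trigger, question_trigger,
                   target_answer, rate=0.01, seed=0):
    """Return poisoned copies of the data plus the sorted indices that were poisoned.

    images: (N, H, W, C) array; visual_trigger: (h, w, C) patch stamped in the
    top-left corner (a hypothetical placement, not the paper's optimized trigger).
    """
    rng = np.random.default_rng(seed)
    n_poison = max(1, int(round(rate * len(images))))
    idx = rng.choice(len(images), size=n_poison, replace=False)
    # Work on copies so the clean dataset is left untouched.
    images = images.copy()
    questions, answers = list(questions), list(answers)
    h, w = visual_trigger.shape[:2]
    for i in idx:
        images[i, :h, :w] = visual_trigger                   # key 1: visual trigger
        questions[i] = f"{question_trigger} {questions[i]}"  # key 2: question trigger
        answers[i] = target_answer                           # attacker's target output
    return images, questions, answers, np.sort(idx)
```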

-

Wang, J., Wu, Z., Chen, J., Han, X., Shrivastava, A., Lim, S. N., & Jiang, Y. G. (2023). ObjectFormer for Image Manipulation Detection and Localization. arXiv preprint arXiv:2203.14681.

Abstract: Recent advances in image editing techniques have posed serious challenges to the trustworthiness of multimedia data, which drives the research of image tampering detection. In this paper, we propose ObjectFormer to detect and localize image manipulations. To capture subtle manipulation traces that are no longer visible in the RGB domain, we extract high-frequency features of the images and combine them with RGB features as multimodal patch embeddings. Additionally, we use a set of learnable object prototypes as mid-level representations to model the object-level consistencies among different regions, which are further used to refine patch embeddings to capture the patch-level consistencies. We conduct extensive experiments on various datasets and the results verify the effectiveness of the proposed method, outperforming state-of-the-art tampering detection and localization methods.
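The abstract says high-frequency image features are combined with RGB features into multimodal patch embeddings, but it does not name the transform used. The sketch below substitutes a simple FFT high-pass filter. It concatenates the residual with RGB as an extra channel and cuts the result into flattened patches. The following are all assumptions for illustration, not ObjectFormer's implementation: the `cutoff` value, the grayscale input to the filter, and the function names. A learned linear projection would normally follow the patching step.

```python
import numpy as np

def high_frequency_residual(image, cutoff=0.1):
    """Remove low spatial frequencies from a 2-D image and return the residual.

    cutoff: radius in normalized frequency (cycles/pixel) below which
    coefficients are zeroed; 0.1 is an illustrative value.
    """
    h, w = image.shape
    spectrum = np.fft.fft2(image)
    fy = np.fft.fftfreq(h)[:, None]   # vertical frequencies in [-0.5, 0.5)
    fx = np.fft.fftfreq(w)[None, :]   # horizontal frequencies
    radius = np.sqrt(fy ** 2 + fx ** 2)
    spectrum[radius < cutoff] = 0     # high-pass mask
    return np.real(np.fft.ifft2(spectrum))

def multimodal_patches(rgb, patch=8, cutoff=0.1):
    """Stack RGB with a high-frequency channel and flatten into patch tokens.

    rgb: (H, W, 3) array. Returns (num_patches, patch * patch * 4).
    """
    hf = high_frequency_residual(rgb.mean(axis=2), cutoff)[..., None]
    x = np.concatenate([rgb, hf], axis=2)            # (H, W, 4)
    h, w, c = x.shape
    x = x[: h - h % patch, : w - w % patch]          # drop incomplete border patches
    gh, gw = x.shape[0] // patch, x.shape[1] // patch
    x = x.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(gh * gw, patch * patch * c)
```

A constant image has no high-frequency content, so its residual is zero. Fine texture and sharp edits survive the filter, which is the kind of trace the abstract says is hard to see in the RGB domain alone.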

-

Zhou, P., Yu, N., Wu, Z., Davis, L. S., Shrivastava, A., & Lim, S. N. (2021). Deep Video Inpainting Detection. arXiv preprint arXiv:2101.11080.

Abstract: This paper studies video inpainting detection, which localizes an inpainted region in a video both spatially and temporally. In particular, we introduce VIDNet, Video Inpainting Detection Network, which contains a two-stream encoder-decoder architecture with an attention module. To reveal artifacts encoded in compression, VIDNet additionally takes in Error Level Analysis frames to augment RGB frames, producing multimodal features at different levels with an encoder. Exploring spatial and temporal relationships, these features are further decoded by a Convolutional LSTM to predict masks of inpainted regions. In addition, when detecting whether a pixel is inpainted or not, we present a quad-directional local attention module that borrows information from its surrounding pixels from four directions. Extensive experiments are conducted to validate our approach. We demonstrate, among other things, that VIDNet not only outperforms alternative inpainting detection methods by clear margins but also generalizes well to novel videos that are unseen during training.
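Error Level Analysis (ELA) is a standard forensic transform. It re-saves an image as JPEG at a known quality and measures how much each pixel changes. Regions with a different compression history tend to stand out. The sketch below computes an ELA frame for a single image with Pillow and NumPy. The quality of 90 and the function name are illustrative choices. The abstract does not say which settings VIDNet uses.

```python
import io

import numpy as np
from PIL import Image, ImageChops

def error_level_analysis(img, quality=90):
    """Return |img - JPEG(img)| per pixel and channel as a float32 array (H, W, 3)."""
    rgb = img.convert("RGB")
    buf = io.BytesIO()
    rgb.save(buf, format="JPEG", quality=quality)   # recompress at a fixed quality
    buf.seek(0)
    resaved = Image.open(buf).convert("RGB")
    diff = ImageChops.difference(rgb, resaved)      # absolute per-pixel difference
    return np.asarray(diff, dtype=np.float32)
```

For video, the same function would be applied frame by frame. The resulting ELA frames would then sit alongside the RGB frames as the second input stream the abstract describes.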

-

Girish, S., Suri, S., Rambhatla, S., & Shrivastava, A. (2021). Towards Discovery and Attribution of Open-world GAN Generated Images. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV).

Abstract: With the recent progress in Generative Adversarial Networks (GANs), it is imperative for media and visual forensics to develop detectors for identifying and attributing images to the model generating them. Existing works have been shown to attribute GAN-generated images with high accuracy. However, they work in a closed-set scenario and fail to generalize to GANs unseen during training. Therefore, they are not scalable with a steady influx of new GANs. We present an iterative algorithm for discovering images generated from previously unseen GANs by exploiting the fact that all GANs leave distinct fingerprints on their generated images. Our algorithm consists of multiple components involving network training, out-of-distribution detection, clustering, merge and refine steps. We provide extensive experiments to show that our algorithm discovers unseen GANs with high accuracy and also generalizes to GANs trained on unseen real datasets. Our experiments demonstrate that our approach effectively discovers new GANs and can be used in an open-world setup.
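The abstract lists out-of-distribution detection and clustering as components of the discovery loop. The sketch below is a generic stand-in for one round of that idea and not the paper's algorithm. It makes several assumptions:

- Fingerprint features have already been extracted.
- Known GAN sources are represented by centroids.
- Samples far from every centroid are treated as coming from unseen GANs.
- Those samples are grouped with plain k-means.

The threshold, `k`, and all function names are hypothetical. The paper's network training, merge, and refine steps are omitted.

```python
import numpy as np

def flag_out_of_distribution(features, centroids, threshold):
    """True for samples farther than `threshold` from every known-source centroid."""
    d = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
    return d.min(axis=1) > threshold

def kmeans(x, k, iters=50):
    """Plain k-means with deterministic farthest-point seeding; returns (labels, centers)."""
    centers = [x[0]]
    for _ in range(1, k):
        # Next seed: the point farthest from all seeds chosen so far.
        d = np.min([np.linalg.norm(x - c, axis=1) for c in centers], axis=0)
        centers.append(x[d.argmax()])
    centers = np.array(centers)
    for _ in range(iters):
        labels = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=2).argmin(axis=1)
        new = np.array([x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels, centers

def discover_step(features, known_centroids, threshold, k):
    """One hypothetical discovery round: flag OOD samples, cluster them into k
    candidate new sources, and append those centers to the known set."""
    ood = flag_out_of_distribution(features, known_centroids, threshold)
    if ood.sum() < k:
        return known_centroids, ood
    _, new_centers = kmeans(features[ood], k)
    return np.vstack([known_centroids, new_centers]), ood
```

In an iterative setting, the enlarged set of sources would be used to retrain the attribution network before the next round. This sketch leaves that step out.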