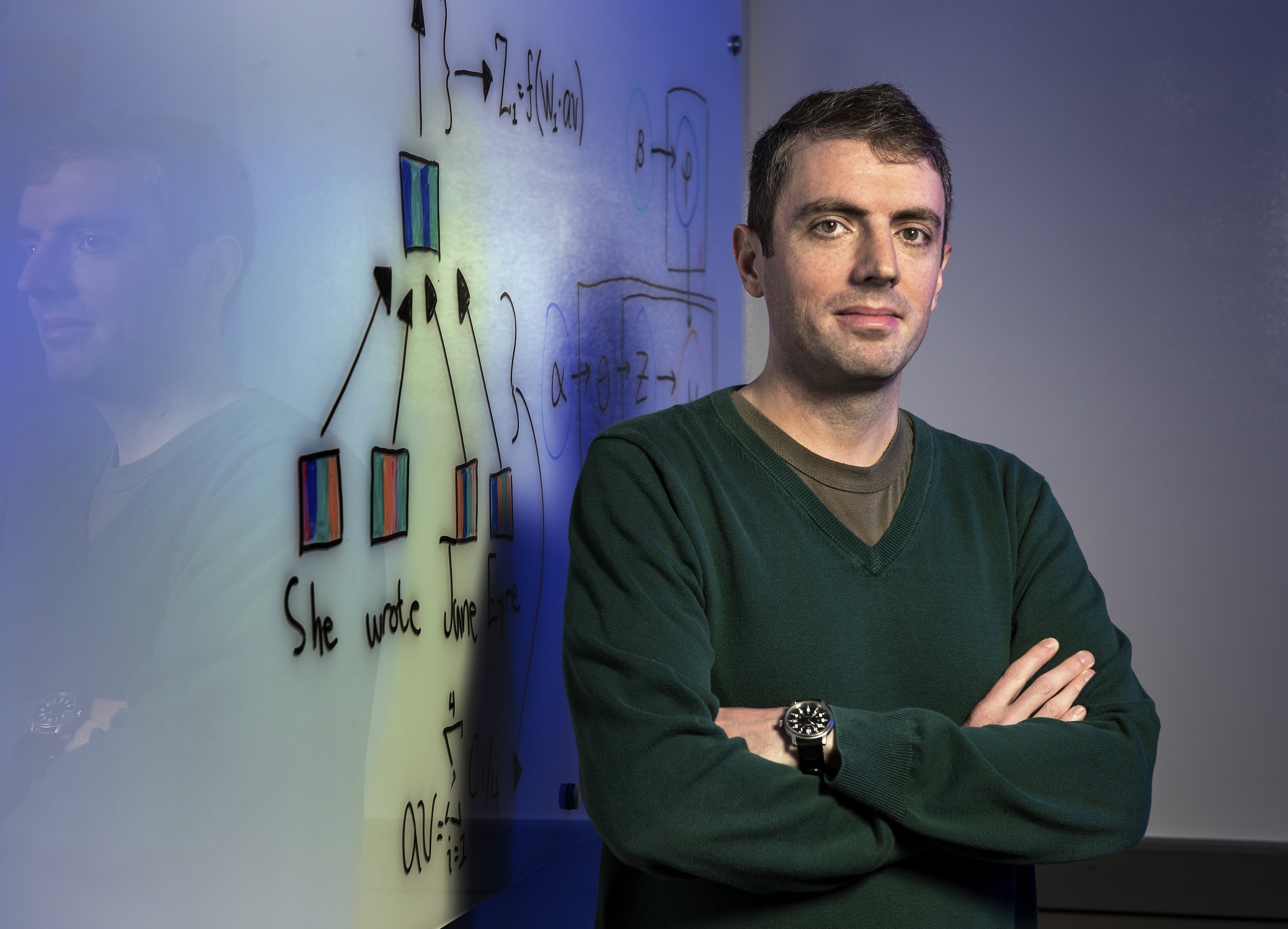

Jordan Boyd-Graber

Professor of Computer Science

University of Maryland

Jordan Boyd-Graber is a professor of computer science with appointments in the Maryland Language Science Center, the College of Information Studies, and the University of Maryland Institute for Advanced Computer Studies, where he is also a member of the Computational Linguistics and Information Processing Lab. His research focuses on making machine learning more useful, more interpretable, and able to interact with and learn from humans.

Area of Expertise: Calibration of AI

---

Si, C., Zhao, C., Min, S., & Boyd-Graber, J. (2022). Re-Examining Calibration: The Case of Question Answering. Findings of Empirical Methods in Natural Language Processing.

Abstract: For users to trust model predictions, they need to understand model outputs, particularly their confidence. Calibration aims to adjust (calibrate) models’ confidence to match expected accuracy. We argue that the traditional calibration evaluation does not promote effective calibration: for example, it can encourage assigning the same mediocre confidence score to all predictions, which does not help users distinguish correct predictions from wrong ones. Building on these observations, we propose a new calibration metric, MACROCE, that better captures whether the model assigns low confidence to wrong predictions and high confidence to correct predictions. Focusing on the practical application of open-domain question answering, we examine conventional calibration methods applied to the widely used retriever-reader pipeline, none of which brings significant gains under our new MACROCE metric. Toward better calibration, we propose a new calibration method (CONSCAL) that uses not just final model predictions but also whether multiple model checkpoints make consistent predictions. Altogether, we provide an alternative view of calibration along with a new metric, a re-evaluation of existing calibration methods on our metric, and a proposal for a more effective calibration method.
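The sketch below makes the point about mediocre confidence concrete by contrasting expected calibration error (ECE) with a MacroCE-style metric. It is a minimal Python illustration, not the paper's code. It assumes that MacroCE averages two per-instance errors: (1 - confidence) over correct predictions, and confidence over wrong predictions. It also assumes ECE with 10 equal-width bins. The function names and the toy predictions are hypothetical.

```python
from typing import Sequence


def ece(confs: Sequence[float], correct: Sequence[bool], n_bins: int = 10) -> float:
    """Expected calibration error with equal-width confidence bins."""
    n = len(confs)
    total = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        # A confidence of exactly 1.0 goes into the last bin.
        idx = [i for i, c in enumerate(confs)
               if lo <= c < hi or (b == n_bins - 1 and c == 1.0)]
        if not idx:
            continue
        avg_conf = sum(confs[i] for i in idx) / len(idx)
        acc = sum(correct[i] for i in idx) / len(idx)
        # Weight each bin's |accuracy - confidence| gap by its share of examples.
        total += len(idx) / n * abs(acc - avg_conf)
    return total


def macro_ce(confs: Sequence[float], correct: Sequence[bool]) -> float:
    """MacroCE-style score (assumed definition): mean of the error on
    correct predictions (1 - confidence) and on wrong ones (confidence).
    Requires at least one correct and one wrong prediction."""
    pos = [1.0 - c for c, ok in zip(confs, correct) if ok]
    neg = [c for c, ok in zip(confs, correct) if not ok]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


correct = [True, True, False, False]       # 50% accuracy
mediocre = [0.5, 0.5, 0.5, 0.5]            # same confidence for everything
discriminative = [0.9, 0.9, 0.1, 0.1]      # high when right, low when wrong

print(ece(mediocre, correct), macro_ce(mediocre, correct))  # 0.0 0.5
print(round(ece(discriminative, correct), 3),
      round(macro_ce(discriminative, correct), 3))          # 0.1 0.1
```

Lower is better for both scores. On this toy data, ECE rates the uninformative model as perfectly calibrated. The MacroCE-style score instead prefers the model whose confidence separates right answers from wrong ones.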

---

Han, H., Carpuat, M., & Boyd-Graber, J. (2022). SimQA: Detecting Simultaneous MT Errors through Word-by-Word Question Answering. Empirical Methods in Natural Language Processing.

Abstract: Detractors of neural machine translation admit that while its translations are fluent, it sometimes gets key facts wrong. This is particularly important in simultaneous interpretation, where translations have to be provided as fast as possible: before a sentence is complete. Yet evaluations of simultaneous machine translation (SIMULMT) fail to capture whether systems correctly translate the most salient elements of a question: people, places, and dates. To address this problem, we introduce a downstream word-by-word question answering evaluation task (SIMQA): given a source language question, translate the question word by word into the target language, and answer as soon as possible. SIMQA jointly measures whether SIMULMT models translate the question quickly and accurately, and it can reveal shortcomings in existing neural systems: hallucinating or omitting facts.

---

Feng, S., & Boyd-Graber, J. (2022). Learning to Explain Selectively: A Case Study on Question Answering. Empirical Methods in Natural Language Processing.

Abstract: Explanations promise to bridge the gap between humans and AI, yet it remains difficult to achieve consistent improvement in AI-augmented human decision making. The usefulness of AI explanations depends on many factors, and always showing the same type of explanation in all cases is suboptimal, as is relying on heuristics to adapt explanations for each scenario. We propose learning to explain “selectively”: for each decision that the user makes, we use a model to choose the best explanation from a set of candidates and update this model with feedback to optimize human performance. We experiment on a question answering task, Quizbowl, and show that selective explanations improve human performance for both experts and crowdworkers.

---

Eisenschlos, J. M., Dhingra, B., Bulian, J., Börschinger, B., & Boyd-Graber, J. (2021). Fool Me Twice: Entailment from Wikipedia Gamification. North American Chapter of the Association for Computational Linguistics.

Abstract: We release FOOLMETWICE (FM2 for short), a large dataset of challenging entailment pairs collected through a fun multi-player game. Gamification encourages adversarial examples, drastically lowering the number of examples that can be solved using “shortcuts” compared to other entailment datasets. Players are presented with two tasks. The first task asks the player to write a plausible claim based on the evidence from a Wikipedia page. The second shows two plausible claims written by other players, one of which is false, and the goal is to identify the false claim before time runs out. Players “pay” to see clues retrieved from the evidence pool: the more evidence the player needs, the harder the claim. Game-play between motivated players leads to diverse strategies for crafting claims, such as temporal inference and diverting to unrelated evidence, and results in higher-quality data for the entailment and evidence retrieval tasks. We open-source the dataset and game code.

---

Hoyle, A., Goel, P., Peskov, D., Hian-Cheong, A., Boyd-Graber, J., & Resnik, P. (2021). Is Automated Topic Model Evaluation Broken?: The Incoherence of Coherence. Neural Information Processing Systems.

Abstract: Topic model evaluation, like evaluation of other unsupervised methods, can be contentious. However, the field has coalesced around automated estimates of topic coherence, which rely on the frequency of word co-occurrences in a reference corpus. Contemporary neural topic models surpass classical ones according to these metrics. At the same time, topic model evaluation suffers from a validation gap: automated coherence, developed for classical models, has not been validated using human experimentation for neural models. In addition, a meta-analysis of topic modeling literature reveals a substantial standardization gap in automated topic modeling benchmarks. To address the validation gap, we compare automated coherence with the two most widely accepted human judgment tasks: topic rating and word intrusion. To address the standardization gap, we systematically evaluate a dominant classical model and two state-of-the-art neural models on two commonly used datasets. Automated evaluations declare a winning model when corresponding human evaluations do not, calling into question the validity of fully automatic evaluations independent of human judgments.
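For readers unfamiliar with automated coherence, the sketch below computes normalized pointwise mutual information (NPMI), averaged over all pairs of a topic's top words. NPMI is one widely used co-occurrence-based coherence score. This is an illustrative Python sketch, not the evaluation code from the paper. It assumes document-level co-occurrence in the reference corpus, whereas many tools use sliding windows instead. The function name `npmi_coherence` and the toy corpus are hypothetical.

```python
import math
from itertools import combinations
from typing import Iterable, Sequence


def npmi_coherence(top_words: Sequence[str], docs: Iterable[Iterable[str]]) -> float:
    """Mean NPMI over all pairs of a topic's top words.

    NPMI(wi, wj) = log(P(wi, wj) / (P(wi) P(wj))) / -log P(wi, wj),
    with probabilities estimated as the fraction of reference documents
    containing the word(s) at least once.
    """
    doc_sets = [set(d) for d in docs]
    n = len(doc_sets)

    def p(*words: str) -> float:
        # Fraction of documents that contain every word in `words`.
        return sum(all(w in d for w in words) for d in doc_sets) / n

    scores = []
    for wi, wj in combinations(top_words, 2):
        p_ij = p(wi, wj)
        if p_ij == 0.0:
            scores.append(-1.0)  # never co-occur: minimum NPMI
        elif p_ij == 1.0:
            scores.append(1.0)   # co-occur in every document: maximum NPMI
        else:
            pmi = math.log(p_ij / (p(wi) * p(wj)))
            scores.append(pmi / -math.log(p_ij))
    return sum(scores) / len(scores)


reference = [
    ["cat", "dog", "pet"],
    ["cat", "dog"],
    ["stock", "market"],
    ["stock", "market", "price"],
]
print(round(npmi_coherence(["cat", "dog"], reference), 3))           # 1.0
print(round(npmi_coherence(["cat", "dog", "stock"], reference), 3))  # -0.333
```

A higher score means a topic's top words tend to co-occur in the reference corpus. This is the kind of automated score that, the abstract argues, has not been validated against human judgments for neural topic models.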